Meaning Architecture - Part V

The Upstream Variable We’ve Been Missing

Across months of mapping failure signatures in AI systems, one pattern kept appearing:

Drift doesn’t begin in the model’s logic. It begins upstream, in the conditions that shape how meaning forms.

And here’s what’s now clear:

Across every domain (model behavior, interpretive layers, decision-support pipelines), a single upstream variable consistently predicts whether a system’s meaning stays coherent or begins to fracture.

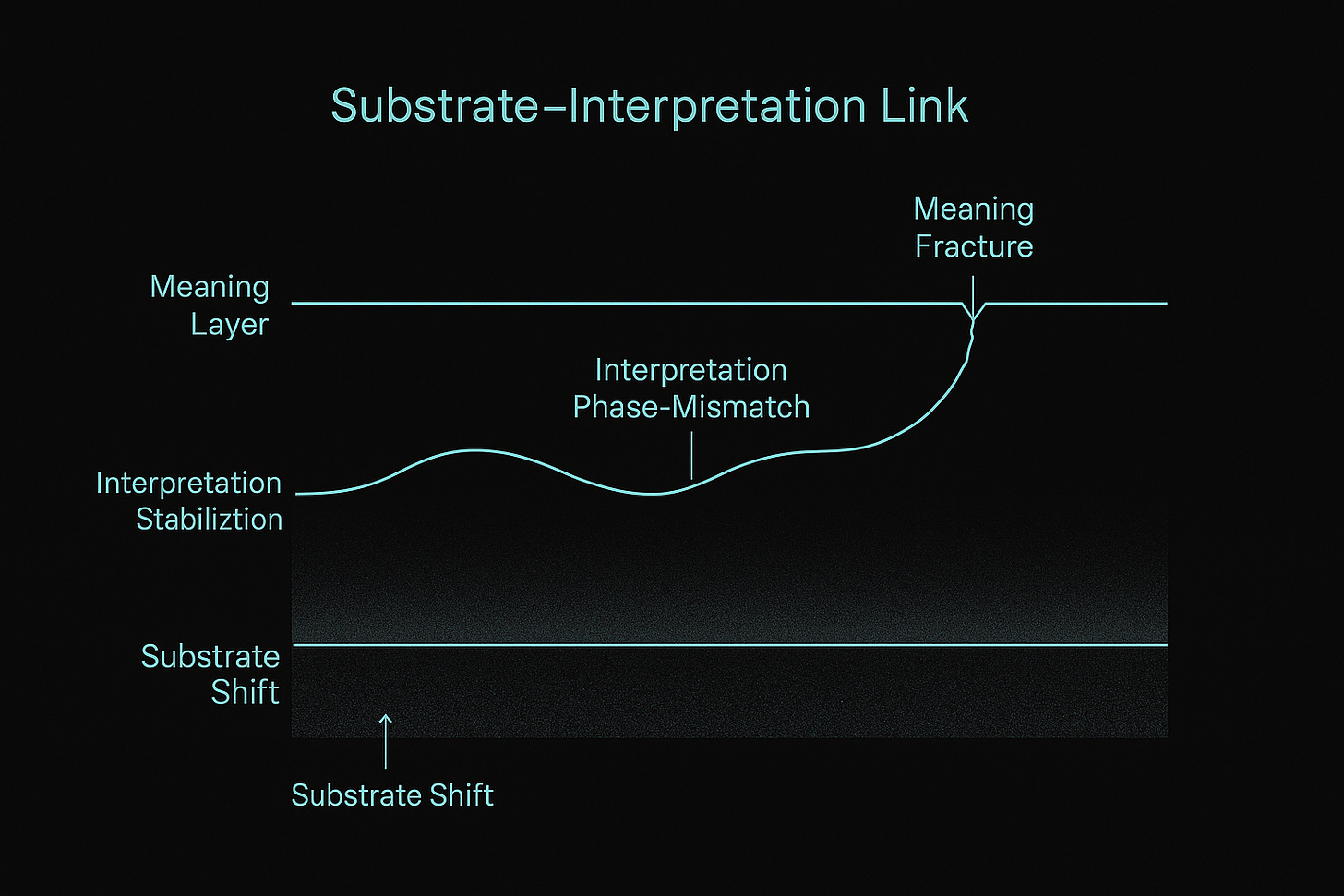

I’m calling it the Substrate–Interpretation Link.

It’s deceptively simple:

1. Small shifts in the substrate layer

2. Change how the system stabilizes early interpretations

3. Which determines whether meaning remains coherent or begins to drift

You don’t need the full math to see the impact.

You only need to note when meaning begins to bend, then compare that moment with what changed upstream before the system ever produced an output.

I’ll be unpacking this in a structured way:

• How substrate shifts express as early phase-mismatch

• How that mismatch sets the stage for frame intrusion, prior drift, and inversion

• And why AI governance should be looking upstream long before metrics degrade

This is the part of the story that sits before drift.

The part we’ve been missing.

More soon.
