Meaning Architecture - Part IV

Stabilizing the Meaning Layer in AI-Enabled Operations

If drift begins upstream, stabilization must begin there too.

The meaning layer can be reinforced -

but only if we treat it as a strategic domain, not a byproduct of modeling.

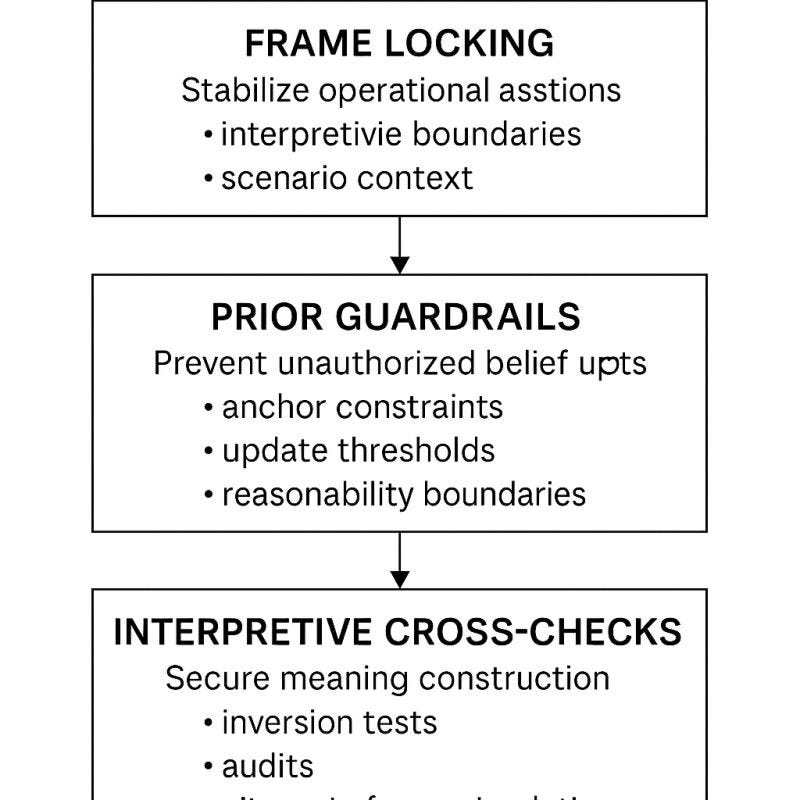

Here are the three countermeasures that prevent meaning drift before it fractures decision-making:

1. Frame Locking

Systems need enforced boundaries on what frame they are allowed to use when interpreting signals.

Frame locking stabilizes:

- scenario context

- interpretive boundaries

- operational assumptions

Without frame locking, systems remix reality every time a new pattern emerges.

This is how strategic drift begins.

2. Prior Guardrails

Models should not update their internal beliefs freely.

They need:

- update thresholds

- domain-anchored constraints

- sanity boundaries around what a “reasonable prior shift” is

Prior guardrails prevent AI from quietly redefining the world while outputs look clean.

This is where most organizations fail:

they don’t monitor belief change - only performance.

3. Interpretive Cross-Checks

The system must be forced to justify its meaning-making, not just its outputs.

Cross-checking includes:

- alternate frame simulation

- meaning-layer audits

- interpretive inversion tests

- high-signal/low-signal consistency checks

This reveals drift the moment it begins - not after decisions degrade.

You can’t stabilize decisions downstream if meaning collapses upstream.

The integrity of AI-enabled operations depends on securing:

- the frame

- the priors

- the interpretive rules

Meaning is the battlespace.

Stability is the objective.

AI doesn’t fail when outputs go wrong.

It fails when meaning moves.

Stabilize the meaning layer, and everything downstream holds.