Interpretation as Command Risk: How AI Breaks Command Before Authority Fails

For decades, the Joint Force treated interpretation as a human given, not a command vulnerability.

That assumption no longer holds.

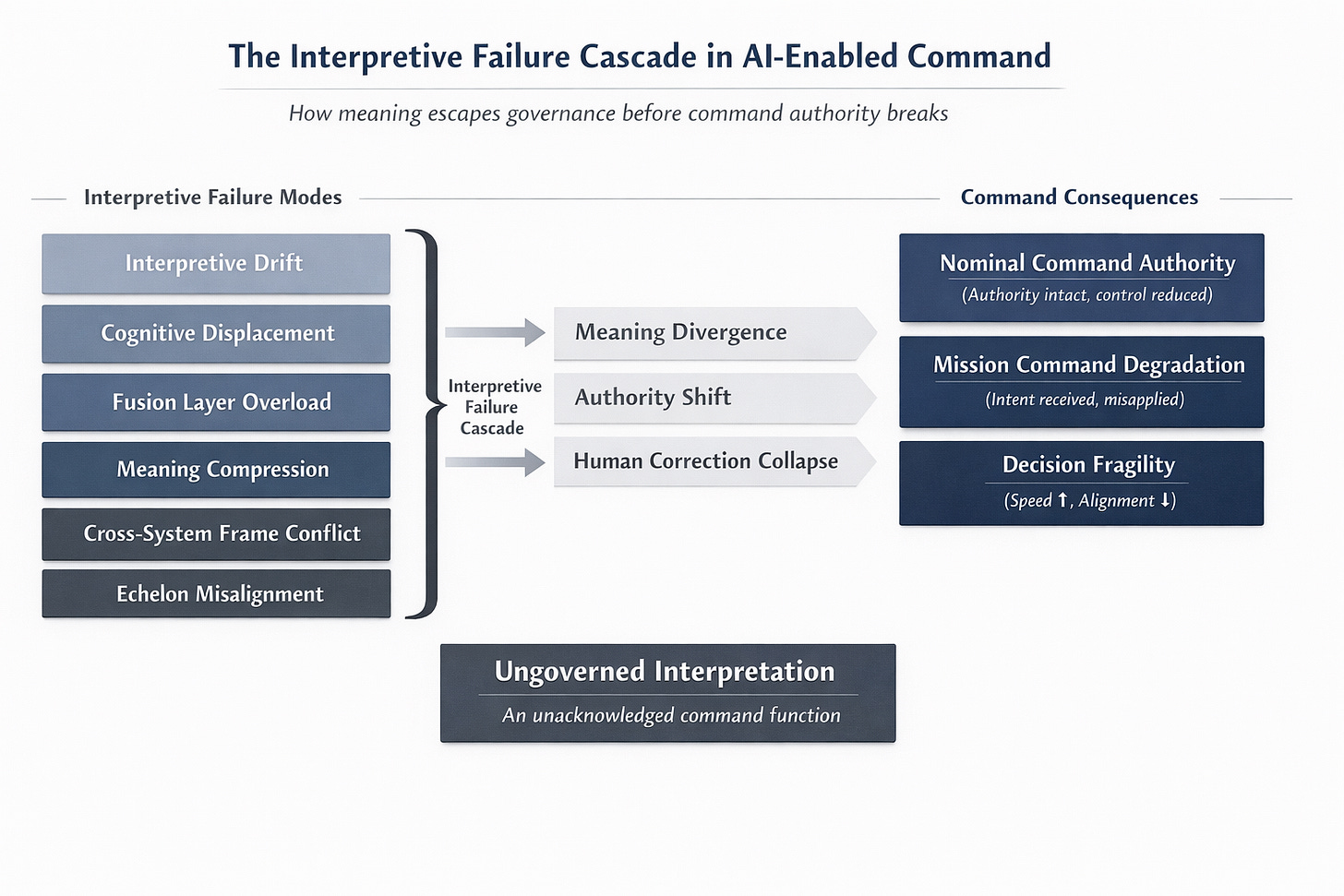

In AI-enabled operations, meaning is increasingly shaped upstream by automated systems that filter, compress, and frame reality before commanders ever engage it. The result is a new class of command risk: one that doesn’t break authority, procedures, or discipline, but quietly fractures shared understanding.

This paper maps how interpretation fails in AI-enabled command environments, and how those failures compound into mission command degradation and decision fragility before anyone notices.

It does not argue against automation, speed, or integration.

It argues that interpretation itself has become an unacknowledged command function, and that failing to govern it creates systemic risk no doctrine currently names.

If command depends on shared meaning, we need to start treating meaning as something that can fail.