Human–AI Coherence Synchronization Index (HACSI)

We keep asking the wrong question about human–AI teaming:

“Is the model accurate?”

Accuracy matters.

But it’s not what breaks missions.

What breaks missions is when humans and machines

stop seeing the same world.

That’s a coherence problem.

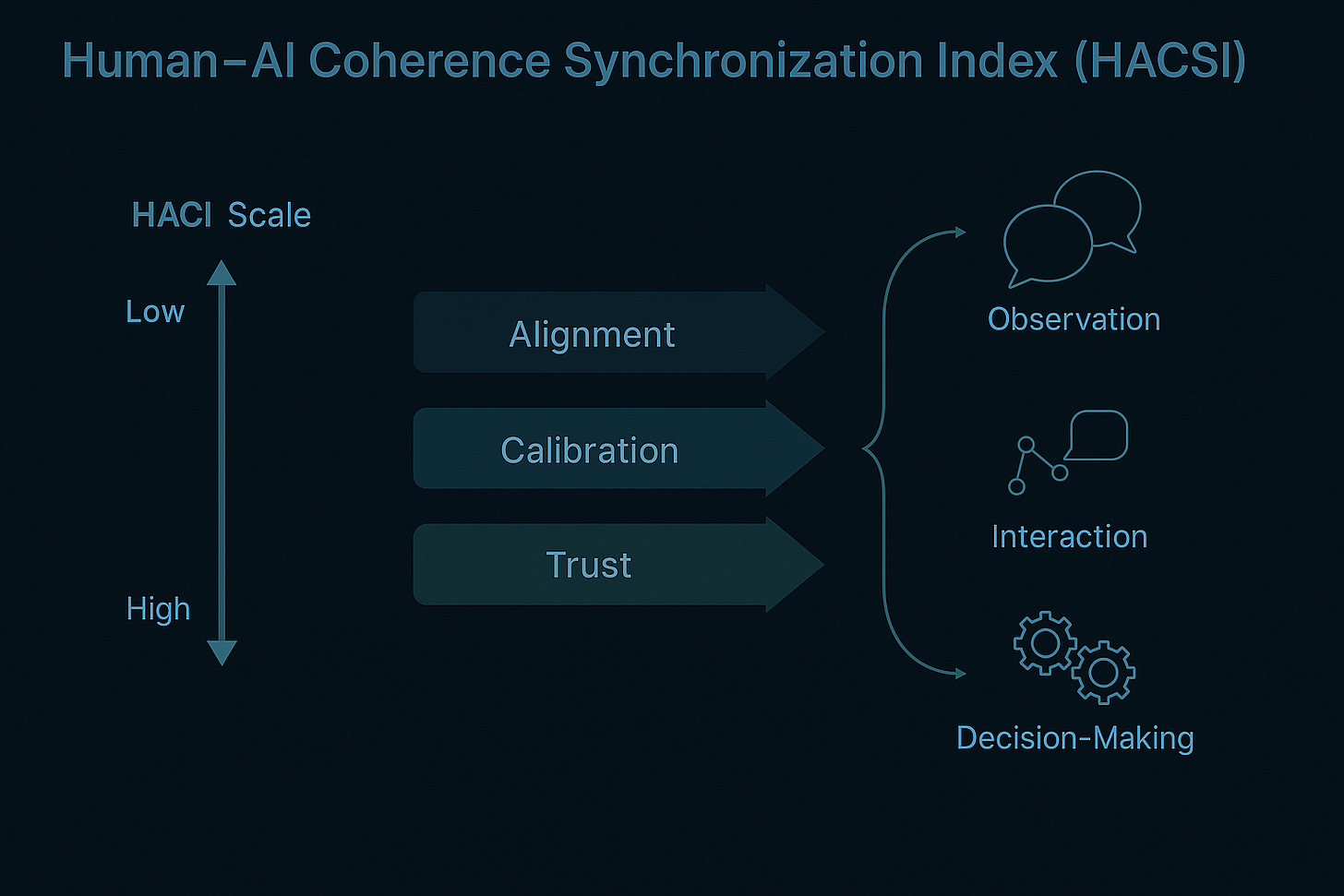

So I built the Human–AI Coherence Synchronization Index (HACSI).

HACSI doesn’t care how shiny the model is.

It measures one thing:

How closely the human’s interpretation of the battlespace

matches the AI’s interpretation over time.

On the HACSI map, you plot two scores:

- AI coherence

- human coherence

When they move together in a narrow band, you have synchronized clarity.

When they split, you get three dangerous zones:

- Over-Trust – the AI looks coherent while the human is cognitively overloaded

- Human Override – the human is stable but fighting a drifting system

- Global Confusion – both are degraded and nobody realizes it yet
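
To make the zones concrete, here is a minimal sketch of how a pair of scores could be sorted into them. It is an illustration, not the HACSI scoring method itself. Everything numeric is an assumption: scores are taken to be normalized to 0..1, `band` is a hypothetical width for the "narrow band", and `floor` is a hypothetical level below which a score counts as degraded. The names `Zone`, `classify_zone`, and `first_desync` are invented for this example.

```python
from enum import Enum


class Zone(Enum):
    SYNCHRONIZED = "synchronized clarity"
    OVER_TRUST = "over-trust"
    HUMAN_OVERRIDE = "human override"
    GLOBAL_CONFUSION = "global confusion"


def classify_zone(ai, human, band=0.15, floor=0.5):
    """Map one (AI coherence, human coherence) pair to a zone.

    Assumptions (not from the original post): scores lie in [0, 1],
    `band` is the maximum gap that still counts as "together", and
    `floor` is the level below which a score counts as degraded.
    """
    if not (0.0 <= ai <= 1.0 and 0.0 <= human <= 1.0):
        raise ValueError("scores are assumed to be normalized to [0, 1]")
    # Both degraded: check this first, because two low scores can sit
    # close together and still be a problem.
    if ai < floor and human < floor:
        return Zone.GLOBAL_CONFUSION
    # Scores close together: synchronized clarity.
    if abs(ai - human) <= band:
        return Zone.SYNCHRONIZED
    # Scores have split: whichever side is higher names the zone.
    return Zone.OVER_TRUST if ai > human else Zone.HUMAN_OVERRIDE


def first_desync(series, band=0.15, floor=0.5):
    """Return (step, zone) for the first non-synchronized step, or None."""
    for step, (ai, human) in enumerate(series):
        zone = classify_zone(ai, human, band=band, floor=floor)
        if zone is not Zone.SYNCHRONIZED:
            return step, zone
    return None


# Hypothetical timeline of (AI coherence, human coherence) pairs.
timeline = [(0.90, 0.88), (0.85, 0.80), (0.80, 0.50), (0.40, 0.30)]
print(first_desync(timeline))
```

In this made-up timeline the pair first leaves the band at step 2, where the AI score stays high while the human score drops. Where to set `band` and `floor` is a judgment call that would need real data to settle.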

This is the piece missing from most “human–machine teaming” discussions.

We don’t just need better models.

We need a way to see, in real time, when the human and the machine

have quietly stopped agreeing on what’s true.

HACSI is a way to make that visible.

Because if interpretation is the new center of gravity,

then human–AI coherence is no longer a soft topic.

It’s a survivability metric.