Constraint Before Capability - Why AI Programs Fail When Governance Is Added Last

Most AI governance fails before the system is even built.

Not because organizations don’t care about risk - but because governance is consistently added after capability is already in motion. Once a system exists, oversight no longer governs. It rationalizes.

This paper argues for a simple but uncomfortable principle: constraints must come before capability. Governance that arrives after deployment cannot prevent failure; it can only explain it.

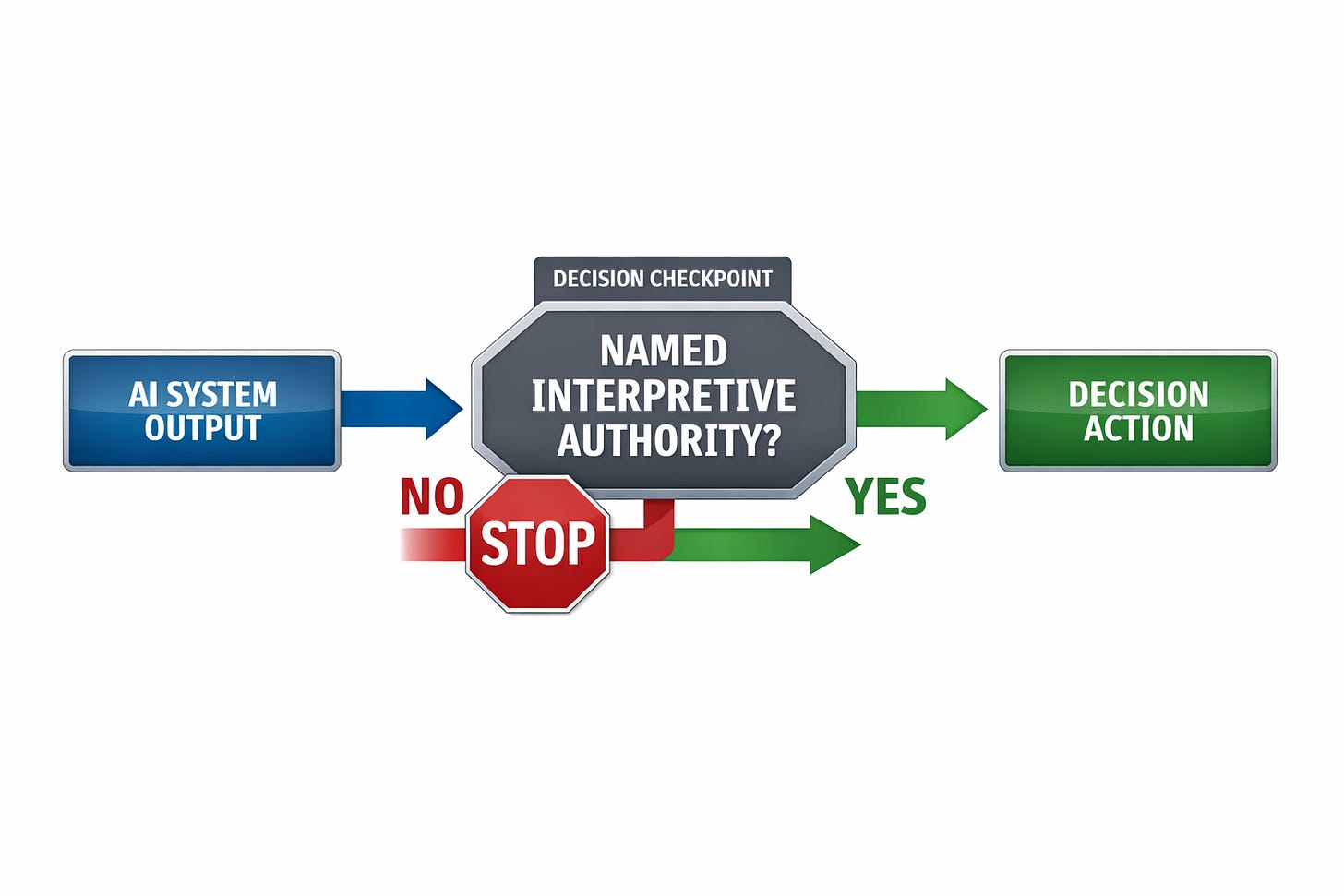

I outline why AI programs drift, why “we’ll govern it later” is structurally doomed, and why real governance requires the authority to say “do not proceed” before funding, development, or deployment begins.

This isn’t about tools, ethics checklists, or maturity models.

It’s about sequencing - and leadership.