Confidence Is Retrospective; Command Is Prospective
Re-Establishing Decision Authority in Probabilistic Systems

A Brief Doctrine Paper

Most modern decision failures don’t come from bad data.

They come from misplaced authority.

Across AI-augmented environments, probabilistic confidence has quietly begun to function as command. Scores, thresholds, and “high certainty” indicators are treated as authorization, often without anyone explicitly deciding that they should be.

That substitution feels responsible.

It isn’t.

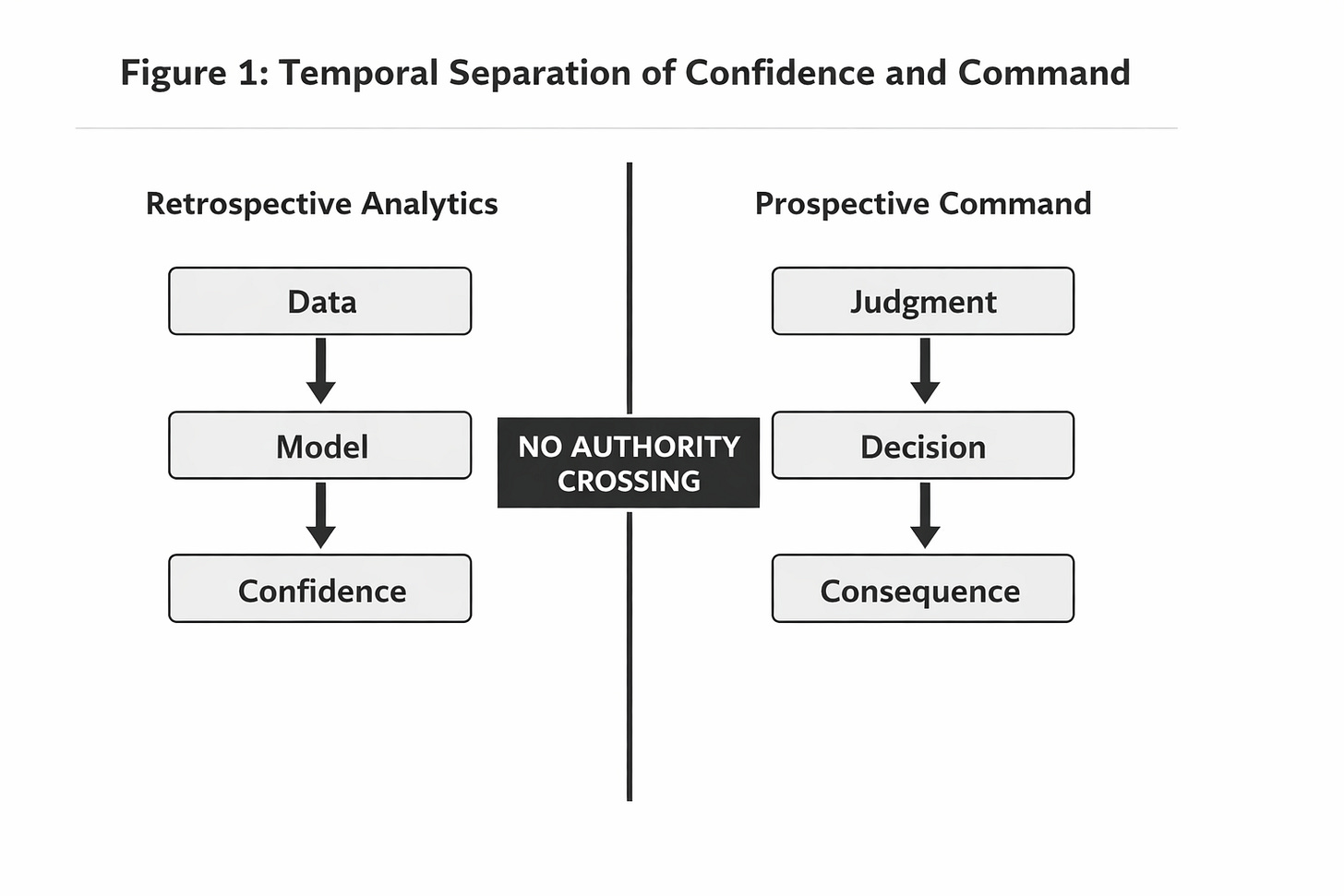

Confidence is retrospective.

Command is prospective.

This paper draws a hard doctrinal line between the two. It explains why probability can inform judgment but cannot assume responsibility, how systems quietly accumulate authority through design and language, and what breaks when decisions occur without a decision-maker.

This isn’t a critique of AI.

It’s a correction of how we govern it.

If you work anywhere decisions carry consequence (operations, policy, acquisition, or system design), this distinction matters more than model accuracy ever will.

Confidence explains the past.

Command answers for the future.