Autonomy vs. Control: Who Pulls the Trigger?

Human-in-the-Loop Debates and Decision Latency

Executive Frame

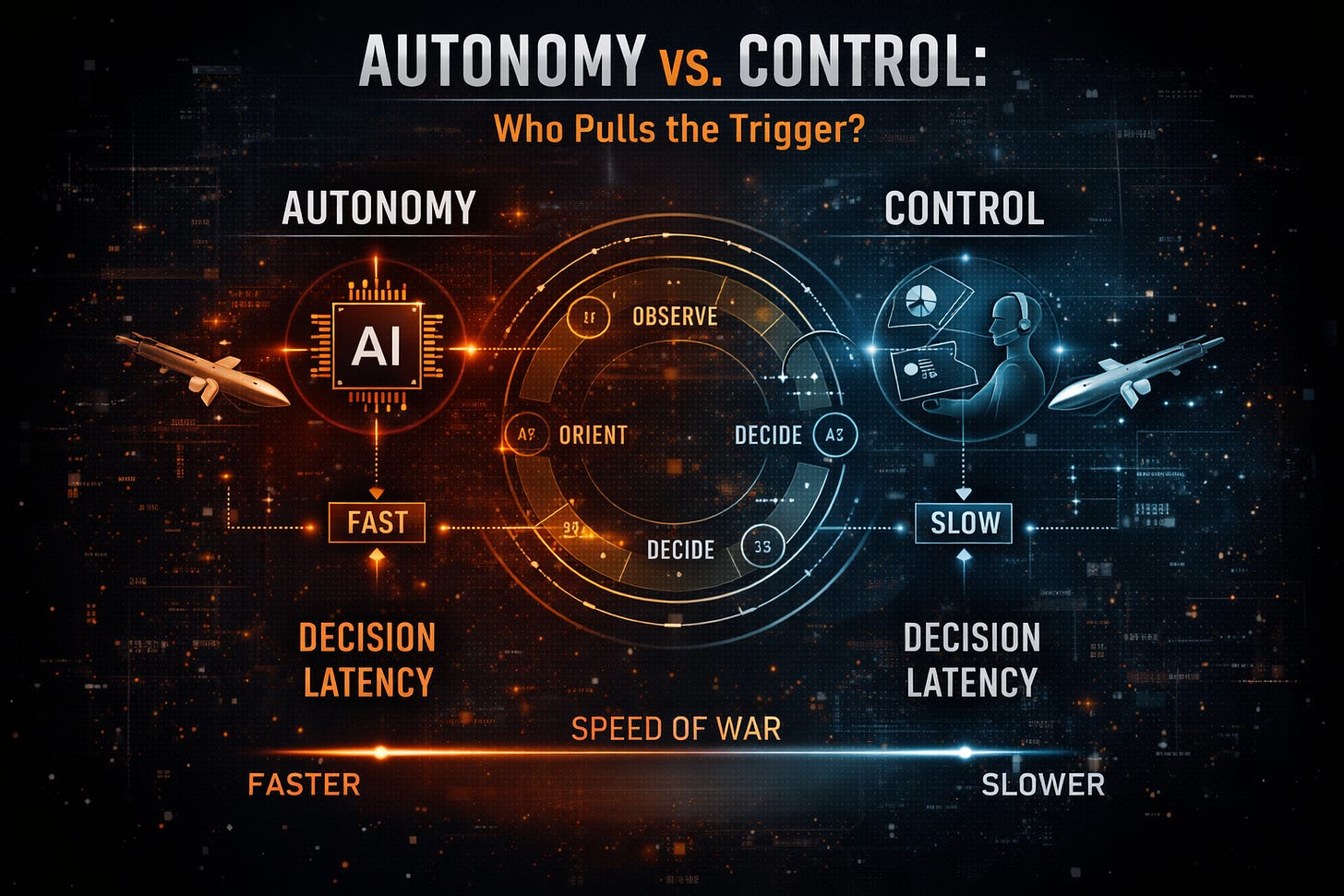

Every serious debate about AI in warfare eventually collapses into a deceptively simple question:

Who pulls the trigger?

The phrase sounds almost theatrical, but it masks the real issue. In modern systems, there often is no trigger in the traditional sense. There is a chain of sensor inputs, model inferences, confidence thresholds, rules of engagement, interface prompts, and time-compressed approvals. By the time a human “acts,” the decision has frequently already been made.

This is not a philosophical abstraction. It is an architectural fact.

The autonomy versus control debate is not about whether humans exist in the loop. It is about whether humans meaningfully govern it, or merely certify outcomes generated at machine speed.

This piece examines where control actually lives in contemporary systems, why latency has become the decisive factor, and how the human-in-the-loop framework is quietly failing under operational pressure.

The Comfort Myth of the Trigger

We inherited the trigger metaphor from mechanical weapons. A finger, a moment of intent, an irreversible act.

Algorithmic systems dissolved that clarity.

In modern architectures:

Targeting begins long before contact

Threat classification evolves continuously

Engagement windows are measured in milliseconds

Actions are queued, not pulled

The “trigger” is no longer a moment. It is a process.

When people ask whether a human or a machine pulls the trigger, they are already too late in the chain. The meaningful ethical and operational decisions occur upstream, embedded in system design, thresholds, and defaults.

The real question is not who pulls the trigger.

It is who sets the conditions under which pulling becomes inevitable.

Human-in-the-Loop: From Safeguard to Ritual

Human-in-the-loop (HITL) was introduced as a safeguard. The idea was straightforward: no lethal action without human authorization.

Over time, the concept has degraded.

In many deployed systems, HITL now looks like:

A confirmation button surfaced seconds before execution

A dashboard presenting machine-ranked options

A confidence score accompanied by a recommendation

This is not control. It is procedural reassurance.

When humans are inserted at the end of a pipeline they did not shape, at a tempo they cannot slow, using information they did not curate, the loop is human only in name.

Control is not presence.

Control is authority exercised in time to matter.

Decision Latency as a Weapon

Latency used to be a liability.

Now the ability to eliminate it is a weapon.

Modern conflict rewards systems that can compress the sense-decide-act loop faster than adversaries can respond. This has created immense pressure to automate not just execution, but judgment itself.

The result is a structural dilemma:

Slow systems preserve human judgment but lose operational relevance

Fast systems dominate the battlespace but sideline human control

There is no neutral middle ground.

Every millisecond added for deliberation reduces the risk of a wrong decision and increases the risk of being outpaced. Designers respond predictably: they pre-decide.

Defaults replace deliberation.

Automation absorbs responsibility.
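
The squeeze described above can be made concrete with a little arithmetic. The sketch below is only an illustration: the `LoopBudget` type, the `review_mode` function, the stage names, and every number in it are hypothetical and do not describe any real system. It simply subtracts the machine stages from the available window and asks whether what remains is enough time for a person to reason.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoopBudget:
    """Hypothetical timing for one sense-decide-act cycle, in milliseconds."""
    window_ms: float  # time before the action stops being operationally useful
    sense_ms: float   # time spent collecting and fusing inputs
    infer_ms: float   # time spent on model inference and ranking
    act_ms: float     # time needed to carry out the chosen action

    def human_budget_ms(self) -> float:
        """Time left for human deliberation once the machine stages are paid for."""
        machine_ms = self.sense_ms + self.infer_ms + self.act_ms
        return max(0.0, self.window_ms - machine_ms)


def review_mode(budget: LoopBudget, min_deliberation_ms: float) -> str:
    """Return 'deliberate' if a human has enough time to reason, else 'pre-decided'."""
    if budget.human_budget_ms() >= min_deliberation_ms:
        return "deliberate"
    return "pre-decided"


# Illustrative numbers only; none of them come from a real system.
slow_loop = LoopBudget(window_ms=30_000.0, sense_ms=500.0, infer_ms=200.0, act_ms=300.0)
fast_loop = LoopBudget(window_ms=800.0, sense_ms=300.0, infer_ms=200.0, act_ms=250.0)

print(slow_loop.human_budget_ms(), review_mode(slow_loop, 5_000.0))  # 29000.0 deliberate
print(fast_loop.human_budget_ms(), review_mode(fast_loop, 5_000.0))  # 50.0 pre-decided
```

With the assumed five-second minimum for deliberation, the slow loop leaves room for judgment and the fast loop leaves fifty milliseconds. Nothing in the fast loop removes the human; the budget simply makes the human's contribution a formality.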

The Quiet Shift from Authorization to Endorsement

One of the most dangerous transitions in modern systems is subtle.

Humans no longer authorize actions.

They endorse them.

Authorization implies genuine choice. Endorsement implies acceptance of a recommendation generated elsewhere.

When systems present:

A single “best” option

Framed urgency

Degraded alternative paths

human operators are nudged toward compliance, not judgment.

Over time, trust in the system becomes reliance. Reliance becomes dependency. Dependency becomes abdication.

No policy memo announces this shift. It emerges organically from performance incentives and operational tempo.

Control Lives in Architecture, Not Interfaces

Organizations often attempt to restore control by modifying interfaces.

More alerts. More warnings. More confirmations.

This fails because control is not an interface problem.

Control lives in:

Threshold design

Escalation logic

Fallback behavior

Authority routing

System defaults under uncertainty

If these elements are automated, human intervention becomes cosmetic.

A human cannot override a decision that has already propagated through dependent systems.

By the time a red button appears, the architecture has spoken.

The Illusion of Override

Override functions are frequently cited as proof of human control.

In practice, they are rarely usable.

Effective override requires:

Situational clarity

Time to reason

Confidence in consequences

Organizational permission to intervene

Under combat conditions, none of these are guaranteed.

Worse, overrides that are technically possible but operationally discouraged function as liability shields, not control mechanisms.

If exercising override is career-limiting, it is not real authority.

When Speed Becomes Moral Gravity

Speed exerts moral gravity.

The faster a system moves, the harder it is to resist its momentum. Humans adapt by trusting what they cannot slow.

This is how autonomy creeps in without declaration.

Not through rogue AI.

Through well-intentioned optimization.

Every reduction in latency shifts moral weight from humans to machines. Not because anyone chose it explicitly, but because the system made alternatives impractical.

The False Binary: Autonomy vs. Control

The debate is often framed as a binary choice.

Full autonomy or full human control.

This framing is misleading.

In reality, systems operate on a spectrum of decision delegation. The critical task is not choosing sides, but deciding which decisions may be delegated, under what conditions, and how reversible their consequences must remain.

Execution can be automated.

Detection can be automated.

Recommendation can be automated.

Authorization cannot be silently automated without ethical loss.

Designing for Human Authority Under Speed

If human control is to survive, it must be designed for speed, not against it.

That means:

Pre-committed decision boundaries

Explicit no-go zones encoded in architecture

Interruptible action chains

Clear attribution of authority at each stage

Human judgment must be exercised before time compression eliminates choice.

Ethics delayed is ethics denied.
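
The four design commitments listed above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference design: `ActionChain`, `Stage`, `Outcome`, the `zone` context key, and the role names are all hypothetical. The point it shows is ordering: boundaries are checked before anything runs, every stage must name who answers for it, and a halt request takes effect before the next stage begins.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class Outcome(Enum):
    COMPLETED = "completed"
    BLOCKED = "blocked"            # a pre-committed boundary forbade the action
    INTERRUPTED = "interrupted"    # a human halted the chain part-way
    UNATTRIBUTED = "unattributed"  # a stage had no named authority


@dataclass(frozen=True)
class Stage:
    name: str
    authority: Optional[str]  # who answers for this stage; None means nobody


@dataclass
class ActionChain:
    stages: List[Stage]
    no_go_rules: List[Callable[[Dict[str, object]], bool]]  # True means forbidden
    log: List[str] = field(default_factory=list)
    halted_by: Optional[str] = None

    def interrupt(self, who: str) -> None:
        """Record a halt request; it takes effect before the next stage runs."""
        self.halted_by = who

    def run(self, context: Dict[str, object],
            before_stage: Optional[Callable[["ActionChain", Stage], None]] = None) -> Outcome:
        # No-go boundaries are evaluated first, before any stage executes.
        if any(rule(context) for rule in self.no_go_rules):
            self.log.append("blocked: no-go boundary")
            return Outcome.BLOCKED
        for stage in self.stages:
            if before_stage is not None:
                before_stage(self, stage)  # hook where a human can intervene
            if self.halted_by is not None:
                self.log.append(f"interrupted by {self.halted_by} before {stage.name}")
                return Outcome.INTERRUPTED
            if stage.authority is None:
                self.log.append(f"refused: {stage.name} has no named authority")
                return Outcome.UNATTRIBUTED
            self.log.append(f"{stage.name} ran under {stage.authority}")
        return Outcome.COMPLETED


# Hypothetical stages and rule, for illustration only.
stages = [Stage("detect", "sensor officer"),
          Stage("recommend", "analyst"),
          Stage("authorize", "commander")]
no_go = [lambda ctx: ctx.get("zone") == "protected"]

blocked = ActionChain(stages, no_go).run({"zone": "protected"})


def halt_before_authorize(chain: ActionChain, stage: Stage) -> None:
    if stage.name == "authorize":
        chain.interrupt("commander")


chain = ActionChain(stages, no_go)
result = chain.run({"zone": "open"}, halt_before_authorize)
print(blocked, result, chain.log[-1])
```

The design choice worth noticing is that the boundary check and the authority check are refusals built into the chain itself, not prompts shown to an operator. The interface can be redesigned without weakening them.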

Strategic Risk of Letting the Loop Close

When systems fully close the loop, escalation becomes easier, faster, and less accountable.

Political leaders lose the room to pause.

Operators lose discretion.

Institutions gain deniability.

This is not stability.

It is fragility disguised as efficiency.

Final Question

The future of conflict will not hinge on whether machines can shoot.

They already can.

It will hinge on whether humans retain meaningful authority over when machines must not.

The most dangerous weapon is not an autonomous system.

It is a system so fast that no one can honestly say who decided to pull the trigger.

And when no one decides, responsibility disappears.