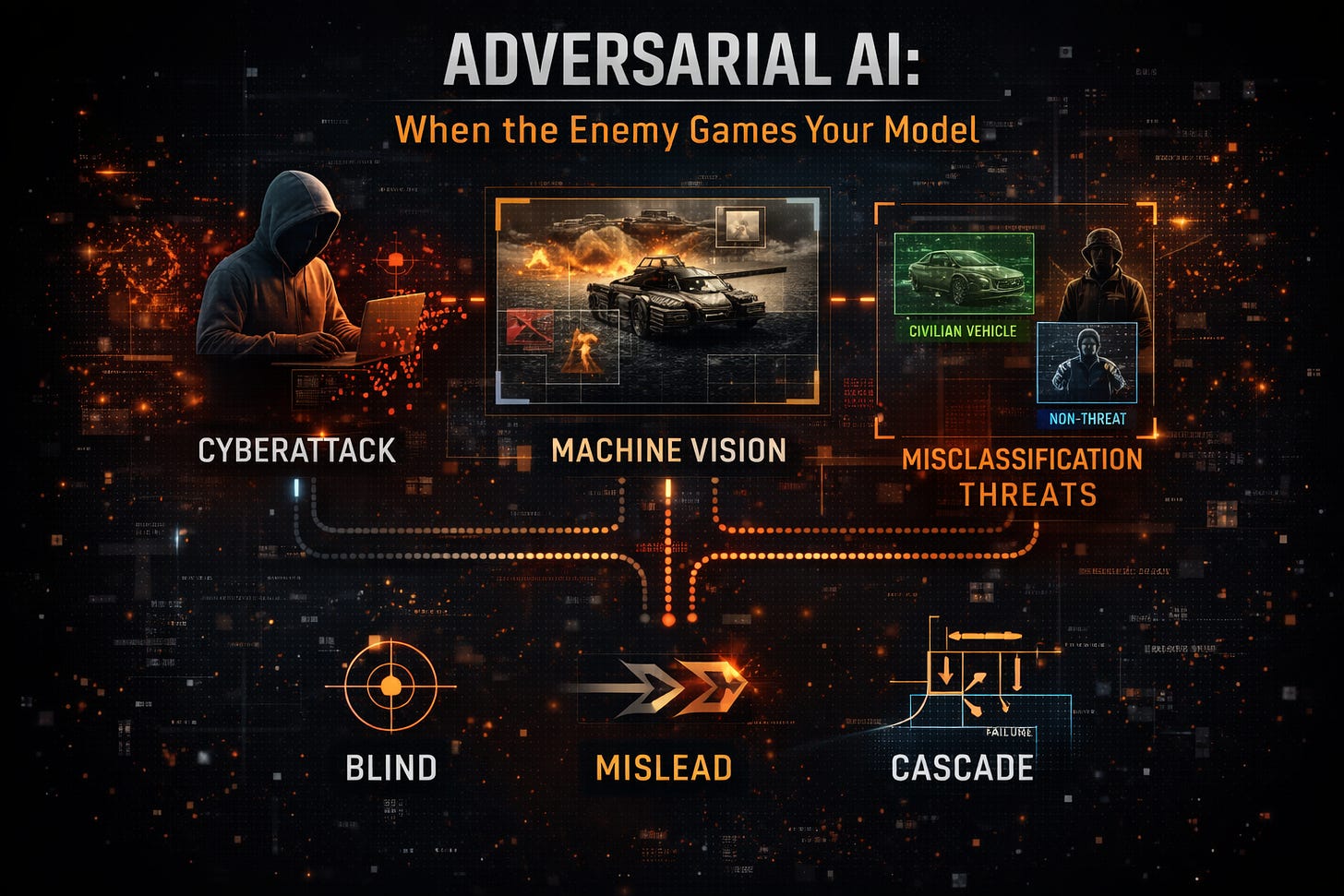

Adversarial AI: When the Enemy Games Your Model

Cyberattacks Against Machine Vision and the Threat of Misclassification

Executive Frame

The most dangerous cyberattacks no longer target networks.

They target perception.

As military and security systems increasingly rely on machine learning to see, classify, and prioritize the world, adversaries have discovered a quieter, more devastating attack surface: the model itself.

Adversarial AI is not about breaking systems. It is about misleading them.

When perception is automated, deception no longer needs to fool humans. It only needs to fool math.

This essay examines how adversarial attacks against machine vision work, why misclassification is a strategic weapon rather than a technical bug, and what it means for modern conflict when the enemy can reshape what your systems believe to be real.

The Shift From Cyber Intrusion to Cognitive Exploitation

Traditional cyber conflict focused on access.

Steal data. Disable systems. Exfiltrate secrets. Deny service.

Adversarial AI operates differently.

It assumes the system is functioning exactly as designed.

The attack occurs not by breaching the perimeter, but by feeding the system inputs it was never designed to doubt.

No malware required.

No zero-day exploit.

Just carefully constructed reality.

This is not hacking infrastructure.

It is hacking interpretation.

Why Machine Vision Is the Primary Target

Machine vision systems are particularly vulnerable because they:

Operate at scale

Act at machine speed

Depend on statistical generalization

Sit upstream of lethal and strategic decisions

From intelligence, surveillance, and reconnaissance (ISR) platforms and autonomous vehicles to targeting systems and biometric identification, vision models increasingly mediate what is seen and acted upon.

If vision fails, everything downstream fails cleanly, confidently, and catastrophically.

Adversaries understand this.

So they do not attack the shooter.

They attack the eye.

Misclassification Is Not Error. It Is Effect.

Engineers often describe adversarial examples as edge cases.

Noise. Artifacts. Academic curiosities.

This framing is dangerously naïve.

Misclassification in an operational system is not a bug.

It is an outcome.

A system that confidently labels a tank as a truck, a civilian as a combatant, or an obstacle as empty space is not merely wrong. It is decisive in the wrong direction.

Unlike human error, machine error scales instantly and uniformly.

One exploit, replicated everywhere.

How Adversarial Attacks Against Vision Work

Adversarial attacks exploit the gap between human perception and model perception.

To a human, an image may appear unchanged.

To a model, it is radically altered.

Common attack vectors include:

Adversarial Perturbations

Small, often imperceptible pixel-level changes that cause a model to misclassify with high confidence.

The image looks the same.

The decision flips.
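Here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a standard perturbation attack from the research literature. It makes "the image looks the same, the decision flips" concrete. The sketch attacks a toy logistic-regression classifier that stands in for a vision model; that substitution is an assumption made for brevity. The function names, weights, bias, 1,000-feature input, and `eps` value are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """P(class 1) under a toy linear (logistic) classifier."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method against the logistic model above.

    For binary cross-entropy the input gradient is (p - y) * w, so each
    feature is shifted by eps in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


# Hypothetical 1,000-feature "image" and weights, chosen for illustration.
n = 1000
w = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
b = 2.0
x = [0.5] * n

x_adv = fgsm(w, b, x, y=1, eps=0.04)
print(round(predict_proba(w, b, x), 3))      # 0.881 -> class 1
print(round(predict_proba(w, b, x_adv), 3))  # 0.119 -> class 0
```

No single feature moves by more than 0.04 on a 0 to 1 scale. Yet the logit shifts by `eps` times the sum of the absolute weights (0.04 × 100 = 4). This accumulation of many small changes is one common explanation for why high-dimensional inputs such as images are so easy to perturb.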

Physical-World Attacks

Stickers, patterns, paint schemes, or shapes applied to real objects that persistently fool models across angles and lighting conditions.

No cyber access required.

Reality becomes the payload.
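Physical attacks have to survive viewing conditions the attacker does not control. One published approach is Expectation over Transformation (EOT). It optimizes the perturbation against the loss averaged over many sampled transformations, rather than against a single view.

The sketch below rests on several assumptions, all chosen purely for illustration:

- a toy linear classifier;
- a 20-feature "sticker" region;
- contrast, brightness, and sensor-noise ranges.

All function names are hypothetical. Because the toy model is linear, every sampled view pushes the patch the same way. The example therefore shows the mechanics of EOT, not the difficulty of attacking a real detector.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def apply_patch(x, mask, patch):
    """Overwrite the masked features (the 'sticker') with patch values."""
    out = list(x)
    for i, v in zip(mask, patch):
        out[i] = v
    return out


def sample_view(rng, n):
    """Hypothetical viewing conditions: contrast a, brightness c, sensor noise."""
    a = rng.uniform(0.8, 1.2)
    c = rng.uniform(-0.1, 0.1)
    noise = [rng.gauss(0.0, 0.05) for _ in range(n)]
    return a, c, noise


def logit(w, b, x, a, c, noise):
    """Toy linear classifier applied to the transformed input a*x + c + noise."""
    return sum(wi * (a * xi + c + ni) for wi, xi, ni in zip(w, x, noise)) + b


def eot_patch(w, b, x, mask, steps=50, lr=0.05, samples=32, seed=0):
    """Signed gradient ascent on the loss averaged over sampled views (EOT)."""
    rng = random.Random(seed)
    patch = [x[i] for i in mask]  # start from the clean pixels
    for _ in range(steps):
        grad = [0.0] * len(mask)
        for _ in range(samples):
            a, c, noise = sample_view(rng, len(x))
            z = logit(w, b, apply_patch(x, mask, patch), a, c, noise)
            # d/d(patch_k) of -log(sigmoid(z)), the loss for the true class 1
            coeff = -(1.0 - sigmoid(z)) * a
            for k, i in enumerate(mask):
                grad[k] += coeff * w[i] / samples
        # step uphill on the expected loss, keeping pixels in the valid [0, 1] range
        patch = [min(1.0, max(0.0, p + lr * ((g > 0) - (g < 0))))
                 for p, g in zip(patch, grad)]
    return patch


def fooled_fraction(w, b, x, trials=500, seed=1):
    """Share of freshly sampled views in which the model no longer says class 1."""
    rng = random.Random(seed)
    hits = sum(logit(w, b, x, *sample_view(rng, len(x))) < 0 for _ in range(trials))
    return hits / trials


n = 100
w = [0.2 if i % 2 == 0 else -0.2 for i in range(n)]
b = -2.0
x = [0.7 if i % 2 == 0 else 0.3 for i in range(n)]  # clean object, class 1
mask = list(range(20))                              # hypothetical sticker region

patch = eot_patch(w, b, x, mask)
clean_rate = fooled_fraction(w, b, x)
adv_rate = fooled_fraction(w, b, apply_patch(x, mask, patch))
print(clean_rate, adv_rate)  # clean views almost never fooled, patched views almost always
```

The patch is not constrained to be small. Inside its region it may take any valid pixel value, much as a printed sticker would.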

Data Poisoning

Corrupting training or update data so the model learns incorrect associations over time.

The system degrades quietly, believing it is improving.
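A deliberately tiny model is enough to show the "quiet" part of data poisoning. The sketch assumes a nearest-centroid classifier over 2-D features. The "tank" and "truck" clusters are hypothetical, as are the six mislabeled points slipped into an update. None of it reflects a real system. Accuracy on the clean validation data stays perfect, while one chosen target input changes class.

```python
import math
from collections import defaultdict


def train_centroids(data):
    """Toy nearest-centroid 'model': the mean 2-D feature vector of each label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (px, py), label in data:
        sums[label][0] += px
        sums[label][1] += py
        counts[label] += 1
    return {lbl: (s[0] / counts[lbl], s[1] / counts[lbl]) for lbl, s in sums.items()}


def classify(centroids, point):
    """Assign the label whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda lbl: math.dist(point, centroids[lbl]))


def accuracy(centroids, data):
    return sum(classify(centroids, p) == lbl for p, lbl in data) / len(data)


# Hypothetical clean training/validation data and a target the attacker cares about.
clean = [((2, 2), "tank"), ((3, 2), "tank"), ((2, 3), "tank"), ((3, 3), "tank"),
         ((-2, -2), "truck"), ((-3, -2), "truck"), ((-2, -3), "truck"), ((-3, -3), "truck")]
target = (1.0, 1.0)

model = train_centroids(clean)
print(classify(model, target), accuracy(model, clean))        # tank 1.0

# Six mislabeled points injected through a (hypothetical) data-update channel.
poison = [((1.5, 1.5), "truck")] * 6
poisoned = train_centroids(clean + poison)
print(classify(poisoned, target), accuracy(poisoned, clean))  # truck 1.0
```

A routine accuracy check on the original data would report no regression here. That is one reason data provenance matters as much as headline metrics.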

Model Inference Attacks

Querying deployed systems to map their decision boundaries, or to train a surrogate copy of the model, then crafting inputs that reliably trigger failure modes.

Reconnaissance precedes exploitation.
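Probing needs nothing more than the labels a deployed system returns. This minimal sketch assumes label-only query access to a hidden classifier, here a hypothetical 2-D linear model. It takes one input the model labels one way and another it labels the other way. It then bisects the line between them, locating the boundary crossing in a handful of queries. `make_black_box` and `boundary_search` are illustrative names, not a library API.

```python
def make_black_box(w, b):
    """Stand-in for a deployed classifier: the caller sees only a 0/1 label."""
    calls = {"n": 0}

    def predict(x):
        calls["n"] += 1  # count queries, the attacker's main cost
        return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)

    return predict, calls


def boundary_search(predict, x_src, x_dst, tol=1e-3):
    """Bisect the segment x_src -> x_dst for the point where the label flips.

    Uses only label queries: no gradients, weights, or confidences.
    """
    src_label = predict(x_src)
    if predict(x_dst) == src_label:
        raise ValueError("endpoints must receive different labels")

    def blend(t):
        return [(1 - t) * s + t * d for s, d in zip(x_src, x_dst)]

    lo, hi = 0.0, 1.0  # blend at lo keeps the source label, blend at hi has flipped
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if predict(blend(mid)) == src_label:
            lo = mid
        else:
            hi = mid
    return hi, blend(hi)


# Hypothetical hidden model; the attacker never reads w or b directly.
predict, calls = make_black_box(w=[1.0, -1.0], b=0.0)
t, point = boundary_search(predict, x_src=[2.0, 0.0], x_dst=[0.0, 2.0])
print(t, point, calls["n"])  # 0.5 [1.0, 1.0] 12
```

Against a real system, an attacker would typically repeat this along many directions. Alternatively, they could fit a surrogate model to the query results and attack that instead. Either way, the number of queries (12 here) is the main cost.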

Confidence Is the Real Vulnerability

The most dangerous aspect of adversarial misclassification is not inaccuracy.

It is confidence.

Machine learning systems do not express doubt the way humans do. They output probabilities that appear authoritative, especially when wrapped in polished interfaces.
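Softmax confidence is not evidence, and a small example shows why. In this hypothetical three-class linear head, the logits grow with the input. Scaling an input further away from anything seen in training drives the top probability toward 1. The prediction is no better grounded for it. The weights and inputs below are invented for illustration.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical three-class linear head over a 2-D feature vector.
W = [[1.0, 0.0],
     [0.5, 0.5],
     [0.0, 1.0]]


def logits_for(x):
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]


near = [1.0, 0.2]   # resembles the training data
far = [20.0, 4.0]   # same direction, 20x larger: unlike anything seen in training

print(round(max(softmax(logits_for(near))), 4))  # 0.4718
print(round(max(softmax(logits_for(far))), 4))   # 0.9997
```

The predicted class is the same in both cases; only the apparent certainty changed. It rose because the input moved away from familiar data, not because the evidence got stronger.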

A wrong answer with high confidence is operationally worse than uncertainty.

Humans hesitate when unsure.

Machines act.

And operators tend to trust machines that have been historically reliable, even when conditions change.

This is how adversarial success compounds.

Cascading Failure Across the Kill Chain

Vision models rarely operate alone.

They feed into:

Targeting systems

Threat prioritization

Command-and-control dashboards

Autonomous navigation

Engagement authorization

A single misclassification upstream propagates downstream without friction.

By the time a human reviews the output, the context that would enable skepticism is gone.

The system has already framed reality.

This is not localized failure.

It is systemic distortion.

Adversarial AI as Strategic Doctrine

State adversaries are not experimenting with adversarial AI.

They are institutionalizing it.

Why?

Because it is:

Cheap relative to kinetic systems

Difficult to attribute

Easy to scale

Hard to deter

A nation does not need superior sensors if it can poison interpretation.

It does not need better weapons if it can induce hesitation, misfires, or false confidence in the enemy’s systems.

Adversarial AI is asymmetric warfare against perception.

The Myth of Model Robustness

The common response is to harden models.

Adversarial training. Defensive distillation. Input sanitization.

These measures help.

They do not solve the problem.

For every defended boundary, new attack surfaces emerge. Robustness gains often cost accuracy on clean inputs. Hardening a model against one class of perturbation can also leave it exposed to others.

More importantly, they assume the model is the correct locus of defense.

It is not.

The system is.

Defense Requires Architectural Thinking

Effective defense against adversarial AI is not about perfect classification.

It is about containing the consequences of being wrong.

This requires:

Multi-model redundancy with disagreement detection

Context-aware validation beyond vision alone

Explicit uncertainty handling

Human escalation when confidence spikes abnormally

Continuous red-teaming of deployed systems
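The first four items in that list can be combined into a single gate in front of any downstream action. The sketch below is one possible arrangement. It assumes that:

- each independent model returns a label-to-probability dictionary;
- a history of recent top-1 confidences is available as a baseline;
- non-vision context is supplied as a simple callback.

The names (`gate`, `Decision`), thresholds (`max_entropy=0.6` nats, `spike_z=3.0`), and example numbers are all hypothetical. Real thresholds would need calibration on real data.

```python
import math
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, Optional


@dataclass
class Decision:
    label: str
    action: str   # "accept" or "escalate"
    reasons: list


def entropy(probs):
    """Shannon entropy (nats) of a label -> probability dict."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)


def gate(model_outputs, baseline_conf, max_entropy=0.6, spike_z=3.0,
         context_check: Optional[Callable[[str], bool]] = None):
    """Decide whether an ensemble's vision label may proceed without a human."""
    reasons = []
    tops = [max(out, key=out.get) for out in model_outputs]
    if len(set(tops)) > 1:                                  # disagreement detection
        reasons.append(f"models disagree: {tops}")

    avg = {lbl: mean(out[lbl] for out in model_outputs) for lbl in model_outputs[0]}
    label = max(avg, key=avg.get)
    if entropy(avg) > max_entropy:                          # explicit uncertainty
        reasons.append(f"ensemble uncertainty {entropy(avg):.2f} nats")

    mu, sigma = mean(baseline_conf), pstdev(baseline_conf)
    if sigma > 0 and (avg[label] - mu) / sigma > spike_z:   # abnormal confidence spike
        reasons.append(f"confidence {avg[label]:.3f} far above baseline {mu:.2f}")

    if context_check is not None and not context_check(label):  # beyond vision alone
        reasons.append("label contradicted by non-vision context")

    return Decision(label, "escalate" if reasons else "accept", reasons)


baseline = [0.78, 0.82, 0.75, 0.85, 0.80]  # hypothetical recent top-1 confidences

normal = gate([{"tank": 0.82, "truck": 0.18},
               {"tank": 0.79, "truck": 0.21},
               {"tank": 0.80, "truck": 0.20}], baseline)
split = gate([{"tank": 0.01, "truck": 0.99},   # one model fooled
              {"tank": 0.85, "truck": 0.15},
              {"tank": 0.80, "truck": 0.20}], baseline)
spoofed = gate([{"tank": 0.002, "truck": 0.998}] * 3, baseline)  # all fooled alike
# e.g. a hypothetical track-history check that rules out "tank" for this object
context = gate([{"tank": 0.82, "truck": 0.18}] * 3, baseline,
               context_check=lambda lbl: lbl != "tank")

for d in (normal, split, spoofed, context):
    print(d.action, d.label, d.reasons)
```

The third case is the one a single-model confidence check misses. Every model agrees, confidently, and only the spike against the historical baseline routes the decision to a human.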

The goal is not to prevent all misclassification.

That is impossible.

The goal is to prevent misclassification from becoming decisive.

The Human Role: Adversarial Skeptic

As models become easier to fool, human operators must be trained not just to use AI, but to distrust it intelligently.

This is counterintuitive.

We train people to rely on automation, then ask them to override it under stress.

Adversarial environments invert that logic.

Humans must learn to recognize when systems are being manipulated, not just when they are malfunctioning.

This is a cognitive skill, not a technical one.

Strategic Implications

Adversarial AI changes the balance of power in subtle but profound ways:

ISR superiority becomes fragile

Automation increases risk under deception

Attribution becomes murky

Escalation control weakens

In such an environment, the side that assumes its models are seeing truth will lose to the side that assumes they are being lied to.

Final Thought

The future of conflict will not be decided solely by who has the best models.

It will be decided by who understands that models can be deceived, plans for that deception, and refuses to grant machine perception unquestioned authority.

When the enemy games your model, the question is not whether your AI failed.

It is whether your system was designed to survive being wrong.

Because in adversarial AI, the deadliest attack is not the one that shuts your system down.

It is the one that convinces it to act, with absolute confidence, in error.