A Cognitive Architecture for Command and Control in AI-Enabled Operations

Every command knows how to protect networks.

Every command knows how to protect data.

Almost no command knows how to protect meaning.

In AI-enabled operations, systems can be compliant, explainable, and functioning exactly as designed - and command can still fail.

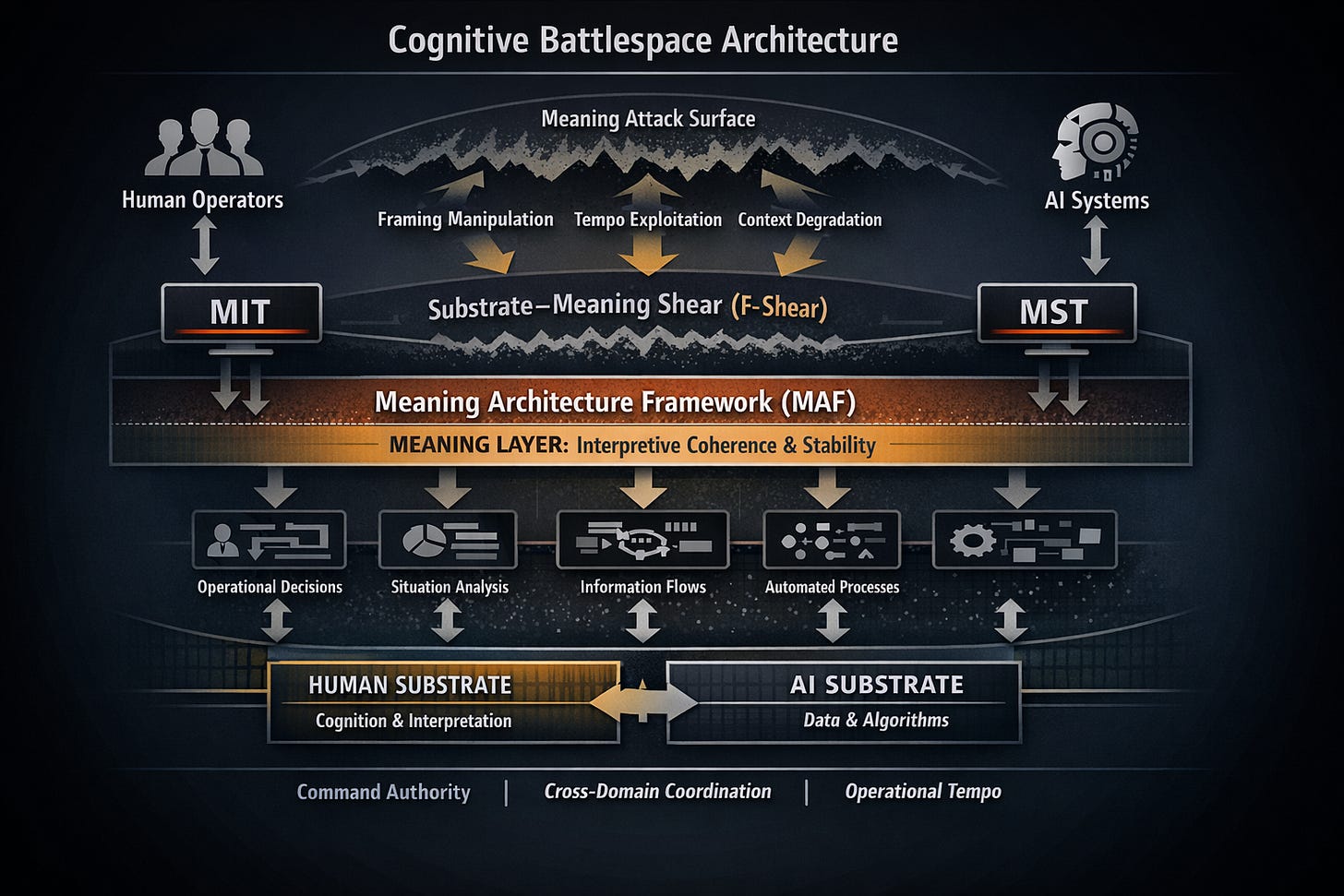

This paper, *Meaning Is the Battlespace*, introduces Meaning Architecture: a doctrine-level approach to making interpretation observable, governable, and defensible in real time. It shifts the focus of command risk from tools and ethics alone to the meaning layer - where intent, context, tempo, and trust actually determine whether decisions cohere or collapse.

The paper presents:

- operational thresholds for meaning stability

- models of how human–machine interpretation diverges under tempo

- early warning indicators of meaning failure

- command interventions that work before collapse

- a future view of command as interpretive governance in an AI-native battlespace

This is not an AI ethics paper.

It’s not a policy memo.

It’s a command architecture for a world where speed is cheap, but meaning is fragile.

If AI is accelerating faster than doctrine can adapt, then meaning - not compute - is now the limiting factor of command.